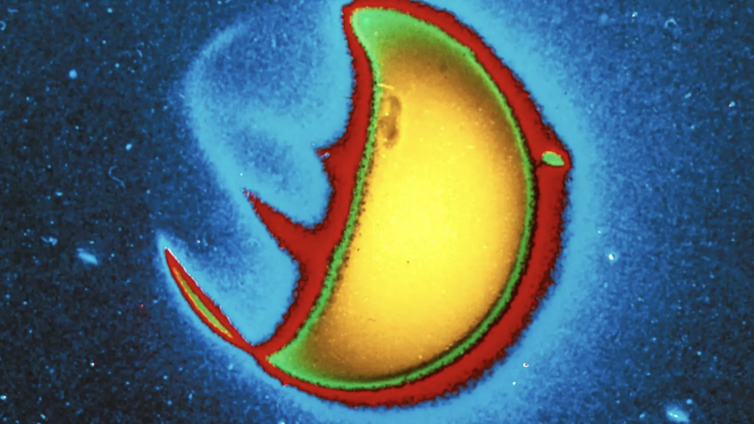

Even from 93 million miles (150 million kilometers) away, activity on the Sun can have adverse effects on technological systems on Earth. Solar flares – intense bursts of energy in the Sun’s atmosphere – and coronal mass ejections – eruptions of plasma from the Sun – can disrupt the communications, satellite navigation and power grid systems that keep society functioning.

On Sept. 24, 2025, NASA launched two new missions to study the influence of the Sun on the solar system, with further missions scheduled for 2026 and beyond.

I’m an astrophysicist who researches the Sun, which makes me a solar physicist. Solar physics is part of the wider field of heliophysics, which is the study of the Sun and its influence throughout the solar system.

The field investigates conditions at a wide range of locations on and around the Sun: its interior, surface and atmosphere, as well as the constant stream of particles flowing from the Sun, called the solar wind. It also investigates how the solar wind interacts with the atmospheres and magnetic fields of planets.

The importance of space weather

Heliophysics overlaps heavily with the study of space weather: the effects of solar activity on humanity’s technological infrastructure.

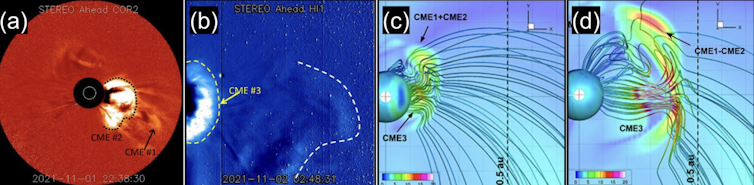

In May 2024, scientists observed the strongest space weather event since 2003. Several Earth-directed coronal mass ejections erupted from the Sun, causing an extreme geomagnetic storm as they interacted with Earth’s magnetic field.

This event produced a spectacular auroral display around the world, giving tens of millions of people at lower latitudes their first view of the northern and southern lights.

But geomagnetic storms also have a darker side. The same event set off overheating alarms in power grids around the world and disrupted satellite navigation, a loss that may have cost the U.S. agricultural industry half a billion dollars.

Still, this was far from the worst space weather event on record: stronger storms in 1989 and 2003 knocked out power grids in Canada and Sweden, respectively.

But even those events were small compared with the largest space weather event in recorded history, which took place in September 1859. Known as the Carrington Event, it is considered the worst-case scenario for extreme space weather. The Carrington Event produced widespread aurora, visible even close to the equator, and disrupted telegraph systems.

If an event like the Carrington Event occurred today, it could cause widespread power outages, the loss of satellites, days of grounded flights and more. Because space weather can be so destructive to human infrastructure, scientists want to better understand these events.

NASA’s heliophysics missions

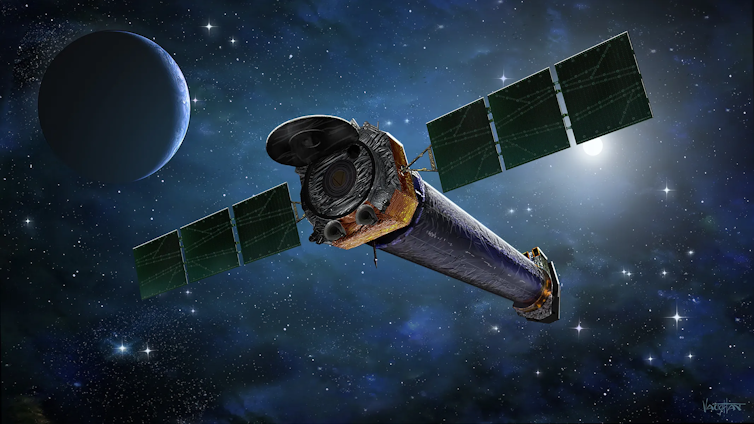

NASA has a vast suite of instruments in space that help scientists better understand our heliosphere, the region of the solar system in which the Sun has significant influence. Among the best known of these missions are the Parker Solar Probe, launched in 2018, the Solar Dynamics Observatory, launched in 2010, the Solar and Heliospheric Observatory, launched in 1995, and the Polarimeter to Unify the Corona and Heliosphere, launched on March 11, 2025.

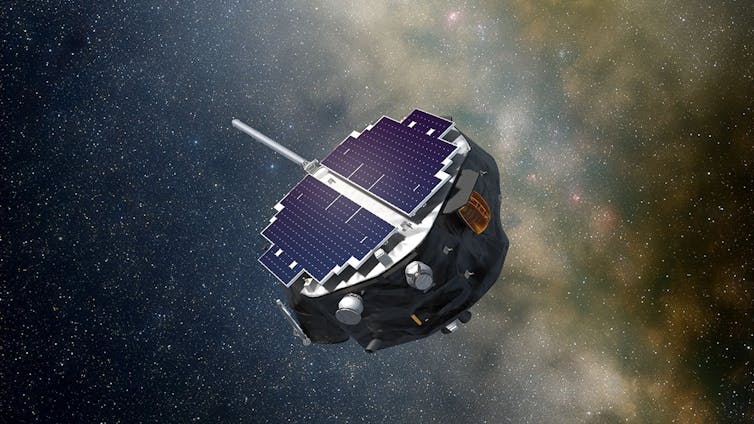

The most recent additions to NASA’s collection of heliophysics missions launched on Sept. 24, 2025: Interstellar Mapping and Acceleration Probe, or IMAP, and the Carruthers Geocorona Observatory. Together, these instruments will collect data across a wide range of locations throughout the solar system.

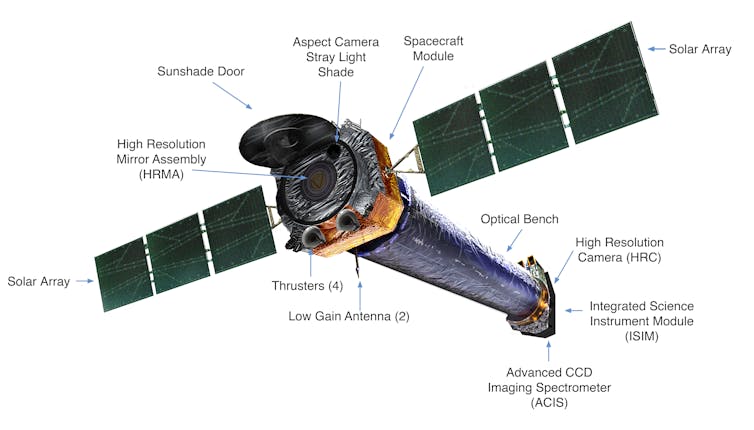

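IMAP is en route to a region of space called Lagrange Point 1, or L1. This point lies about 1% closer to the Sun than Earth, roughly 1 million miles (1.5 million kilometers) from our planet. There, the gravitational pulls of the Sun and Earth balance so that a spacecraft can keep pace with Earth as it orbits the Sun, needing only small course corrections to stay in place.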
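For readers curious where the "1% closer" figure comes from, a rough estimate is easy to reproduce. The sketch below is only an illustration: it uses the standard Hill-sphere approximation, r ≈ a × (m / 3M)^(1/3), which is not taken from this article, together with rounded textbook values for the masses of the Sun and Earth. It ignores the eccentricity of Earth’s orbit and the pull of other planets.

```python
# Rough estimate of the distance from Earth to the Sun-Earth L1 point.
# Assumption: the Hill-sphere approximation r ≈ a * (m / (3 * M)) ** (1/3),
# with rounded textbook constants (not values given in the article).
SUN_MASS_KG = 1.989e30
EARTH_MASS_KG = 5.972e24
EARTH_SUN_DISTANCE_KM = 1.496e8  # about 150 million km (93 million miles)


def l1_distance_km(a_km: float, m_planet_kg: float, m_star_kg: float) -> float:
    """Approximate distance from the planet, toward the star, to L1."""
    return a_km * (m_planet_kg / (3.0 * m_star_kg)) ** (1.0 / 3.0)


r = l1_distance_km(EARTH_SUN_DISTANCE_KM, EARTH_MASS_KG, SUN_MASS_KG)
fraction = r / EARTH_SUN_DISTANCE_KM  # share of the Earth-Sun distance

print(f"L1 is about {r:,.0f} km from Earth, "
      f"or {fraction:.1%} of the Earth-Sun distance")
```

Because Earth’s mass is only about three-millionths of the Sun’s, the cube root of that tiny ratio comes out close to 0.01, which is why L1 sits roughly 1% of the way from Earth to the Sun.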

IMAP carries 10 scientific instruments with goals ranging from real-time measurements of the solar wind, which will improve forecasts of space weather headed for Earth, to mapping the outer boundary between the heliosphere and interstellar space.

This latter goal is a particularly unusual one. IMAP will achieve it by measuring where energetic neutral atoms, a type of uncharged particle, come from. These particles are produced throughout the heliosphere by plasma, a gas of charged particles that are mostly electrons and protons. Because they carry no charge, energetic neutral atoms travel in straight lines, unaffected by magnetic fields, so the direction they arrive from points back to where they formed. By tracking the origins of incoming energetic neutral atoms, IMAP will build a map of the heliosphere.

The Carruthers Geocorona Observatory is heading to the same region around Lagrange Point 1 as IMAP, but with a very different science target. Instead of mapping out to the edge of the heliosphere, it will observe Earth’s exosphere. The exosphere is the uppermost layer of Earth’s atmosphere, beginning roughly 375 miles (600 kilometers) above the ground, and it borders outer space.

Specifically, the mission will observe the geocorona, the ultraviolet glow given off by hydrogen within the exosphere. The Carruthers Geocorona Observatory has two primary objectives. The first relates directly to space weather.

The observatory will measure how the exosphere – our atmosphere’s first line of defense from the Sun – changes during extreme space weather events. The second objective relates more to Earth sciences: The observatory will measure how water is transported from Earth’s surface up into the exosphere.

Looking forward

IMAP and the Carruthers Geocorona Observatory are two heliophysics missions researching very different parts of the heliosphere. In the coming years, new NASA missions will launch to study the object at the center of heliophysics – the Sun.

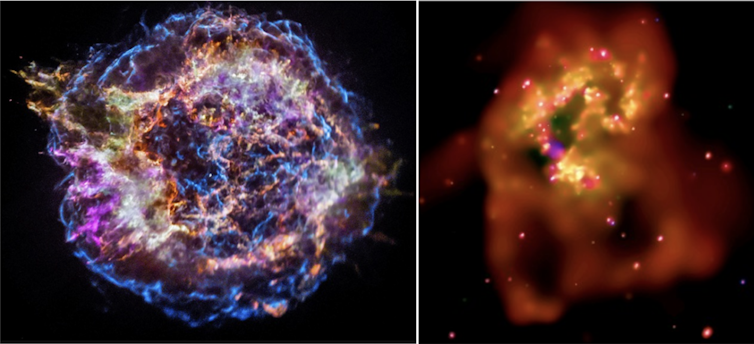

The Sun Coronal Ejection Tracker is scheduled to launch in 2026. It is a shoebox-size satellite, called a CubeSat, designed to study how coronal mass ejections change as they travel through the Sun’s atmosphere.

In 2027, NASA plans to launch the much larger Multi-slit Solar Explorer to capture high-resolution measurements of the Sun’s corona using state-of-the-art instrumentation. This mission aims to uncover the origins of solar flares, coronal mass ejections and heating within the Sun’s atmosphere.

Ryan French, Research Scientist, Laboratory for Atmospheric and Space Physics, University of Colorado Boulder

This article is republished from The Conversation under a Creative Commons license.