Can cosmology untangle the universe’s most elusive mysteries?

From the Big Bang to dark energy, knowledge of the cosmos has sped up in the past century — but big questions linger

“The first thing we know about the universe is that it’s really, really big,” says cosmologist Michael Turner, who has been contemplating this reality for more than four decades now. “And because the universe is so big,” he says, “it’s often beyond the reach of our instruments, and of our ideas.”

Certainly our current understanding of the cosmic story leaves some huge unanswered questions, says Turner, an emeritus professor at the University of Chicago and a visiting faculty member at UCLA. Take the question of origins. We now know that the universe has been expanding and evolving for something like 13.8 billion years, starting when everything in existence exploded outward from an initial state of near-infinite temperature and density — a.k.a. the Big Bang. Yet no one knows for sure what the Big Bang was, says Turner. Nor does anyone know what triggered it, or what came beforehand — or whether it’s even meaningful to talk about “time” before that initial event.

Then there’s the fact that the most distant stars and galaxies our telescopes can potentially see are confined to the “observable” universe: the region that encompasses objects such as galaxies and stars whose light has had time to reach us since the Big Bang. This is an almost inconceivably vast volume, says Turner, extending tens of billions of light-years in every direction. Yet we have no way of knowing what lies beyond. Just more of the same, perhaps, stretching out to infinity. Or realms that are utterly strange — right down to laws of physics that are very different from our own.

But then, as Turner explains in the 2022 Annual Review of Nuclear and Particle Science, mysteries are only to be expected. The scientific study of cosmology, the field that focuses on the origins and evolution of the universe, is barely a century old. It has already been transformed more than once by new ideas, new technologies and jaw-dropping discoveries — and there is every reason to expect more surprises to come.

Knowable Magazine recently spoke with Turner about how these transformations occurred and what cosmology’s future might be. This interview has been edited for length and clarity.

You say in your article that modern, scientific cosmology didn’t get started until roughly the 1920s. What happened then?

It’s not as though nothing happened earlier. People have been speculating about the origin and evolution of the universe for as long as we know of. But most of what was done before about 100 years ago we would now call galactic astronomy, which is the study of stars, planets and interstellar gas clouds within our own Milky Way. At the time, in fact, a lot of astronomers argued that the Milky Way was the universe — that there was nothing else.

But two big things happened in the 1920s. One was the work of a young astronomer named Edwin Hubble. He took an interest in the nebulae, which were these fuzzy patches of light in the sky that astronomers had been cataloging for hundreds of years. There had always been a debate about their nature: Were they just clouds of gas relatively close by in the Milky Way, or other “island universes” as big as ours?

Nobody had been able to figure that out. But Hubble had access to a new 100-inch telescope, which was the largest in the world at that time. And that gave him an instrument powerful enough to look at some of the biggest and brightest of the nebulae, and show that they contained individual stars, not just gas. By 1925, he was also able to estimate the distance to the very brightest nebula, in the constellation of Andromeda. It lay well outside the Milky Way. It was a whole other galaxy just like ours.

So that paper alone solved the riddle of the nebulae and put Hubble on the map as a great astronomer. In today’s terms, he had identified the fundamental architecture of the universe, which is that it consists of these collections of stars organized into galaxies like our own Milky Way — about 200 billion of them in the part of the universe we can see.

But he didn’t stop there. In those days there was this — well, “war” is probably too strong a word, but a separation between the astronomers who took pictures and the astrophysicists who used spectroscopy, which was a technique that physicists had developed in the 19th century to analyze the wavelengths of light emitted from distant objects. Once you started taking spectra of things like stars or planets, and comparing their emissions with those from known chemical elements in the laboratory, you could say, “Oh, not only do I know what it’s made of, but I know its temperature and how fast it’s moving towards or away from us.” So you could start really studying the object.

Just like in other areas of science, though, the very best people in astronomy use all the tools at hand, be they pictures or spectra. In Hubble’s case, he paid particular attention to an earlier paper that had used spectroscopy to measure the velocity of the nebulae. Now, the striking thing about this paper was that some of the nebulae were moving away from us at many hundreds of kilometers per second. In spectroscopic terms they had a high “redshift,” meaning that their emissions were shifted toward longer wavelengths than you’d see in the lab.

So in 1929, when Hubble had solid distance data for two dozen galaxies and reasonable estimates for more, he plotted those values against the redshift data. And he got a striking correlation: The further away a galaxy was, the faster it was moving away from us.

This was the relation that’s now known as Hubble’s law. It took a while to figure out what it meant, though.

Why? Did it require a second big development?

Yes. A bit earlier, in 1915, Albert Einstein had put forward his theory of general relativity, which was a complete paradigm shift and reformulation of gravity. His key insight was that space and time are not fixed, as physicists had always assumed, but are dynamic. Matter and energy bend space and time around themselves, and the “force” we call gravity is just the result of objects being deflected as they move around in this curved space-time. As the late physicist John Archibald Wheeler famously said, “Space tells matter how to move, and matter tells space how to curve.”

It took a few years to connect Einstein’s theory with observation. But by the early or mid-1930s, it was clear that what Hubble had discovered was not that galaxies are moving away from us into empty space, but that space itself is expanding and carrying the galaxies along with it. The whole universe is expanding.

And at least a few scientists in the 1930s began to realize that Hubble’s discovery also meant there was a beginning to the universe.

The turning point was probably George Gamow, a Soviet physicist who defected to the US in the 1930s. He had studied general relativity as a student in Leningrad, and knew that Einstein’s equations implied that the universe had expanded from a “singularity” — a mathematical point where time began and the radius of the universe was zero. It’s what we now call the Big Bang.

But Gamow also knew nuclear physics, which he had helped develop before World War II. And around 1948, he and his collaborators started to combine general relativity and nuclear physics into a model of the universe’s beginning to explain where the elements in the periodic table came from.

Their key idea was that the universe started out hot, then cooled as it expanded the way gas from an aerosol can does. This was totally theoretical at the time. But it would be confirmed in 1965 when radio astronomers discovered the cosmic microwave background radiation. This radiation consists of high-energy photons that emerged from the Big Bang and cooled down as the universe expanded, until today they are just 3 kelvins above absolute zero, which is also the average temperature of the universe as a whole.

In this hot, primordial soup — called ylem by Gamow — matter would not exist in the form it does today. The extreme heat would boil atoms into their constituent components — neutrons, protons and electrons. Gamow’s dream was that nuclear reactions in the cooling soup would have produced all the elements, as neutrons and protons combined to make the nuclei of the various atoms in the periodic table.

But his idea came up short. It took a number of years and a village of people to get the calculations right. But by the 1960s, it was clear that what would come from these nuclear reactions was mostly hydrogen, plus a lot of helium — about 25 percent by weight, exactly what astronomers observed — plus a little bit of deuterium, helium-3 and lithium. Heavier elements such as carbon and oxygen were made later, by nuclear reactions in stars and other processes.

So by the early 1970s, we had the creation of the light elements in a hot Big Bang, the expansion of the universe and the microwave background radiation — the three observational pillars of what’s been called the standard model of cosmology, and what I call the first paradigm.

But you note that cosmologists almost immediately began to shift toward a second paradigm. Why? Was the Big Bang model wrong?

Not wrong — our current understanding still has a hot Big Bang beginning — but incomplete. By the 1970s the idea of a hot beginning was attracting the attention of particle physicists, who saw the Big Bang as a way to study particle collisions at energies you couldn’t hope to reach at accelerators here on Earth. So the field suddenly got a lot bigger, and people started asking questions that suggested the standard cosmology was missing something.

For example, why is the universe so smooth? The intensity and temperature of the microwave background radiation, which is the best measure we have of the whole universe, is almost perfectly uniform in every direction. There’s nothing in Einstein’s cosmological equations that says this has to be the case.


On the flip side, though — why is that cosmic smoothness only almost perfect? After all, the most prominent features of the universe today are the galaxies, which must have formed as gravity magnified tiny fluctuations in the density of matter in the early universe. So where did those fluctuations come from? What seeded the galaxies?

Around this time, evidence had accumulated that neutrons and protons were made of smaller bits — quarks — which meant that the neutron-proton soup would eventually boil, too, becoming a quark soup at the earliest times. So maybe the answers lie in that early quark soup phase, or even earlier.

This is the possibility that led Alan Guth to his brilliant paper on cosmic inflation in 1981.

What is cosmic inflation?

Guth’s idea was that in the tiniest fraction of a second after the initial singularity, according to new ideas in particle physics, the universe ought to undergo a burst of accelerated expansion. This would have been an exponential expansion, far faster than in the standard Big Bang model. The size of the universe would have doubled and doubled and doubled again, enough times to take a subatomic patch of space and blow it up to the scale of the observable universe.

This explained the uniformity of the universe right away, just like if you had a balloon and blew it up until it was the size of the Earth or bigger: It would look smooth. But inflation also explained the galaxies. In the quantum world, it’s normal for things like the number of particles in a tiny region to bounce around. Ordinarily, this averages out to zero and we don’t notice it. But when cosmic inflation produced this tremendous expansion, it blew up these subatomic fluctuations to astrophysical scales, and provided the seeds for galaxy formation.

This result is the poster child for the connection between particle physics and cosmology: The biggest things in the universe — galaxies and clusters of galaxies — originated from quantum fluctuations that were unimaginably small.

You have written that the second paradigm has three pillars, cosmic inflation being the first. What about the other two?

When the details of inflation were being worked out in the early 1980s, people saw there was something else missing. The exponential expansion would have stretched everything out until space was “flat” in a certain mathematical sense. But according to Einstein’s general relativity, the only way the universe could be flat was if its mass and energy content averaged out to a certain critical density. This value was really small, equivalent to a few hydrogen atoms per cubic meter.

But even that was a stretch: Astronomers’ best measurements for the mean density of all the planets, stars and gas in the universe — all the stuff made of atoms — wasn’t even 10 percent of the critical density. (The modern figure is 4.9 percent.) So something else that was not made of atoms had to be making up the difference.
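The critical density mentioned above can be checked with a short calculation. What follows is a minimal sketch, not part of the interview: it assumes the standard general-relativity expression for the critical density, ρ_c = 3H₀²/(8πG), and an illustrative Hubble parameter of 70 km/s/Mpc. Neither the formula nor that value is quoted in the text, and the function names are hypothetical.

```python
import math

# Physical constants (rounded; standard reference values)
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22     # one megaparsec in meters
M_HYDROGEN = 1.6735e-27  # mass of one hydrogen atom, kg


def critical_density(h0_km_s_mpc):
    """Critical density in kg/m^3 for a Hubble parameter given in km/s/Mpc."""
    h0_si = h0_km_s_mpc * 1000.0 / MPC_IN_M  # convert to 1/s
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)


def hydrogen_atoms_per_m3(density_kg_m3):
    """Express a mass density as an equivalent number of hydrogen atoms per m^3."""
    return density_kg_m3 / M_HYDROGEN


H0_ASSUMED = 70.0  # km/s/Mpc, an illustrative assumption, not a value from the interview

rho_c = critical_density(H0_ASSUMED)
atoms_critical = hydrogen_atoms_per_m3(rho_c)
atoms_ordinary = 0.049 * atoms_critical  # the 4.9 percent ordinary-matter share quoted above

print(f"critical density: {rho_c:.2e} kg/m^3")
print(f"equivalent hydrogen atoms per m^3: {atoms_critical:.1f}")
print(f"ordinary matter alone: {atoms_ordinary:.2f} atoms per m^3")
```

Under these assumptions the critical density comes out at between five and six hydrogen atoms per cubic meter, in line with the "few hydrogen atoms" figure, while ordinary matter by itself supplies well under one atom per cubic meter.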

That something turned out to have two components, one of which astronomers had already begun to detect through its gravitational effects. Fritz Zwicky found the first clue back in the 1930s, when he looked at the motions of galaxies in distant clusters. Each of these galactic clusters was obviously held together by gravity, because their galaxies were all close and not flying apart. Yet the velocities Zwicky found were really high, and he concluded that the visible stars alone couldn’t produce nearly enough gravity to keep the galaxies bound. The extra gravity had to be coming from some form of “dark matter” that didn’t shine, but that outweighed the visible stars by a large factor.

Then Vera Rubin and Kent Ford really brought it home in the 1970s with their studies of rotation in ordinary nearby galaxies, starting with Andromeda. They found that the rotation rates were way too fast: There weren’t nearly enough stars and interstellar gas to hold these galaxies together. The extra gravity had to be coming from something invisible — again, dark matter.

Particle physicists loved the dark matter idea, because their unified field theories contained hypothetical particles with names like neutralino, or axion, that would have been produced in huge numbers during the Big Bang, and that had exactly the right properties. They wouldn’t give off light because they had no electric charge and very weak interactions with ordinary matter. But they would have enough mass to produce dark matter’s gravitational effects.

We haven’t yet detected these particles in the laboratory. But we do know some things about them. They’re “cold,” for example, meaning that they move slowly compared to the speed of light. And we know from computer simulations that without the gravity of cold dark matter, those tiny density fluctuations in the ordinary matter that emerged from the Big Bang would never have collapsed into galaxies. They just didn’t have enough gravity by themselves.

So that was the second pillar, cold dark matter. And the third?

As the simulations and the observations improved, cosmologists began to realize that even dark matter was only a fraction of the critical density needed to make the universe flat. (The modern figure is 26.8 percent.) The missing piece was found in 1998 when two groups of astronomers did a very careful measurement of the redshift in distant galaxies, and found that the cosmic expansion was gradually accelerating.

So something — I suggested calling it “dark energy,” and the name stuck — is pushing the universe apart. Our best understanding is that dark energy leads to repulsive gravity, something that is built into Einstein’s general relativity. The crucial feature of dark energy is its elasticity or negative pressure. And further, it can’t be broken into particles — it is more like an extremely elastic medium.

While dark energy remains one of the great mysteries of cosmology and particle physics, it seems to be mathematically equivalent to the cosmological constant that Einstein suggested in 1917. In the modern interpretation, though, it corresponds to the energy of nature’s quantum vacuum. This leads to an extraordinary picture: the cosmic expansion speeding up rather than slowing, all caused by the repulsive gravity of a very elastic, mysterious component of the universe called dark energy. The equally extraordinary evidence for this extraordinary claim has built up ever since and the two teams that made the 1998 discovery were awarded the Nobel Prize in Physics in 2011.

So here is where we are: a flat, critical-density universe comprising ordinary matter at about 5 percent, particle dark matter at about 25 percent and dark energy at about 70 percent. The cosmological constant is still called lambda, the Greek letter that Einstein used. And so the new paradigm is referred to as the lambda-cold dark matter model of cosmology.

So this is your second paradigm — inflation plus cold dark matter plus dark energy?

Yes. And it’s this amazing, glass-half-full, half-empty situation. The lambda-cold dark matter paradigm has these three pillars that are well established with evidence, and that allow us to describe the evolution of the universe from a tiny fraction of a second until today. But we know we’re not done.

For example, you say, “Wow, cosmic inflation sounds really important. It’s why we have a flat universe today and explains the seeds for galaxies. Tell me the details.” Well, we don’t know the details. Our best understanding is that inflation was caused by some still unknown field similar to the Higgs boson discovered in 2012.

Then you say, “Yeah, this dark matter sounds really important. Its gravity is responsible for the formation of all the galaxies and clusters in the universe. What is it?” We don’t know. It’s probably some kind of particle left over from the Big Bang, but we haven’t found it.


And then finally you say, “Oh, dark energy is 70 percent of the universe. That must be really important. Tell me more about it.” And we say, it’s consistent with a cosmological constant. But really, we don’t have a clue why the cosmological constant should exist or have the value it does.

So now cosmology has left us with three physics questions: Dark matter, dark energy and inflation — what are they?

Does that mean we need a third cosmological paradigm to find the answers?

Maybe. It could be that everything’s done in 30 years because we just flesh out our current ideas. We discover that dark matter really is some particle like the axion, that dark energy really is just the constant quantum energy of empty space, and that inflation really was caused by the Higgs field.

But more likely than not, if history is any guide, we’re missing something and there’s a surprise on the horizon.

Some cosmologists are trying to find this surprise by following the really big questions. For example: What was the Big Bang? And what happened beforehand? The Big Bang theory we talked about earlier is anything but a theory of the Big Bang itself; it’s a theory of what happened afterwards.

Remember, the actual Big Bang event, according to Einstein’s general relativity, was this singularity that saw the creation of matter, energy, space and time itself. That’s the big mystery, which we struggle even to talk about in scientific terms: Was there a phase before this singularity? And if so, what was it like? Or, as many theorists think, does the singularity in Einstein’s equations represent the instant when space and time themselves emerged from something more fundamental?

Another possibility that has captured the attention of scientists and public alike is the multiverse. This follows from inflation, where we imagine blowing up a small bit of space to an enormous size. Could that happen more than once, at different places and times? And the answer is yes: You could have had different patches of the wider multiverse inflating into entirely different universes, maybe with different laws of physics in each one. It could be the biggest idea since Copernicus moved us out of the center of the universe. But it’s also very frustrating because right now, it isn’t science: These universes would be completely disconnected, with no way to access them, observe them or show that they actually exist.

Yet another possibility is in the title of my Annual Reviews article: The road to precision cosmology. It used to be that cosmology was really difficult because the instruments weren’t quite up to the task. Back in the 1930s, Hubble and his colleague Milton Humason struggled for years to collect redshifts for a few hundred galaxies, in part because they were recording one spectrum at a time on photographic plates that collected less than 1 percent of the light. Now astronomers use electronic CCD detectors — the same kind that everyone carries around in their phone — that collect almost 100 percent of the light. It’s as if you increased your telescope size without any construction.

And we have projects like the Dark Energy Spectroscopic Instrument on Kitt Peak in Arizona that can collect the spectra of 5,000 galaxies at once — 35 million of them over five years.

So cosmology used to be a data-poor science in which it was hard to measure things with any reliable precision. Today, we are doing precision cosmology, with percent-level accuracy. And further, we are sometimes able to measure things in two different ways and see if the results agree, creating cross-checks that can confirm our current paradigm or reveal cracks in it.

A prime example of this is the expansion rate of the universe, what’s called the Hubble parameter, which is the most important number in cosmology. If nothing else, it tells us the age of the universe: The bigger the parameter, the younger the universe, and vice versa. Today we can measure it directly with the velocities and distances of galaxies out to a few hundred million light-years, at the few percent level.

But there is now another way to measure it with satellite observations of the microwave background radiation, which gives you the expansion rate when the universe was about 380,000 years old, at even greater precision. With the lambda-cold dark matter model you can extrapolate that expansion rate forward to the present day and see if you get the same number as you do with redshifts. And you don’t: The numbers differ by almost 10 percent — an ongoing puzzle that’s called the Hubble tension.

So maybe that’s the loose thread — the tiny discrepancy in the precision measurements that could lead to another paradigm shift. It could be just that the direct measurements of galaxy distances are wrong, or that the microwave background numbers are wrong. But maybe we are finding something that’s missing from lambda-cold dark matter. That would be extremely exciting.
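The link between the Hubble parameter and the age of the universe described above can be illustrated with a rough calculation. This is a minimal sketch, not part of the interview. It uses the "Hubble time" 1/H₀, which is only an age scale: the true age also depends on how much matter and dark energy the universe contains. The two input values, 67.4 and 73 km/s/Mpc, are assumed here as representative of microwave-background and direct distance measurements; they do not appear in the text, and the function names are hypothetical.

```python
MPC_IN_KM = 3.0857e19         # one megaparsec in kilometers
SECONDS_PER_GYR = 3.15576e16  # one billion Julian years in seconds


def hubble_time_gyr(h0_km_s_mpc):
    """Hubble time 1/H0 in billions of years (a rough age scale, not the exact age)."""
    h0_per_second = h0_km_s_mpc / MPC_IN_KM
    return 1.0 / h0_per_second / SECONDS_PER_GYR


def percent_difference(a, b):
    """Difference between two values as a percentage of the smaller one."""
    return 100.0 * abs(a - b) / min(a, b)


# Illustrative values only (assumptions, not figures from the interview)
H0_FROM_CMB = 67.4     # km/s/Mpc, extrapolated from the microwave background
H0_FROM_DIRECT = 73.0  # km/s/Mpc, from galaxy velocities and distances

for label, h0 in (("CMB-based", H0_FROM_CMB), ("direct", H0_FROM_DIRECT)):
    print(f"{label}: H0 = {h0} km/s/Mpc, Hubble time = {hubble_time_gyr(h0):.1f} Gyr")

print(f"difference in H0: {percent_difference(H0_FROM_CMB, H0_FROM_DIRECT):.1f} percent")
```

With these inputs, the larger Hubble parameter gives the shorter Hubble time, and the two values differ by a little under 10 percent, which matches the size of the gap described as the Hubble tension.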

10.1146/knowable-012423-1

This article originally appeared in Knowable Magazine, an independent journalistic endeavor from Annual Reviews.

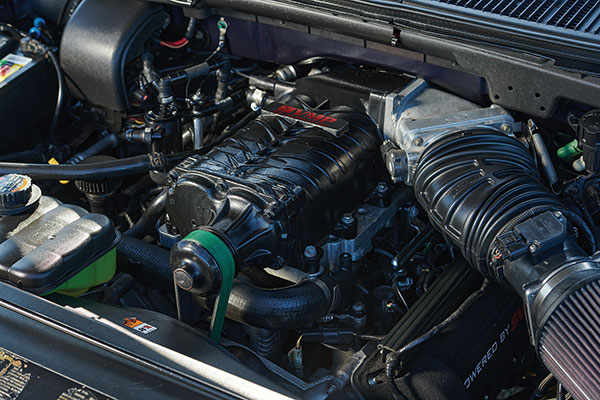
