Scientific highs and lows of cannabinoids

Hundreds of these cannabis-related chemicals now exist, both natural and synthetic, inspiring researchers in search of medical breakthroughs — and fueling a dangerous trend in recreational use

Editor’s note: Raphael Mechoulam passed away on March 9, 2023, at the age of 92.

The 1960s was a big decade for cannabis: Images of flower power, the summer of love and Woodstock wouldn’t be complete without a joint hanging from someone’s mouth. Yet in the early ’60s, scientists knew surprisingly little about the plant. When Raphael Mechoulam, then a young chemist in his 30s at Israel’s Weizmann Institute of Science, went looking for interesting natural products to investigate, he saw an enticing gap in knowledge about the hippie weed: The chemical structure of its active ingredients hadn’t been worked out.

Mechoulam set to work.

The first hurdle was simply getting hold of some cannabis, given that it was illegal. “I was lucky,” Mechoulam recounts in a personal chronicle of his life’s work, published this month in the Annual Review of Pharmacology and Toxicology. “The administrative head of my Institute knew a police officer. ... I just went to Police headquarters, had a cup of coffee with the policeman in charge of the storage of illicit drugs, and got 5 kg of confiscated hashish, presumably smuggled from Lebanon.”

By 1964, Mechoulam and his colleagues had determined, for the first time, the full structure of both delta-9-tetrahydrocannabinol, better known to the world as THC (responsible for marijuana’s psychoactive “high”) and cannabidiol, or CBD.

That chemistry coup opened the door for cannabis research. Over the following decades, researchers including Mechoulam would identify more than 140 active compounds, called cannabinoids, in the cannabis plant, and learn how to make many of them in the lab. Mechoulam helped to figure out that the human body produces its own natural versions of similar chemicals, called endocannabinoids, that can shape our mood and even our personality. And scientists have now made hundreds of novel synthetic cannabinoids, some more potent than anything found in nature.

Today, researchers are mining the huge number of known cannabinoids — old and new, found in plants or people, natural and synthetic — for possible pharmaceutical uses. But, at the same time, synthetic cannabinoids have become a hot trend in recreational drugs, with potentially devastating impacts.

For most of the synthetic cannabinoids made so far, the adverse effects generally outweigh their medical uses, says biologist João Pedro Silva of the University of Porto in Portugal, who studies the toxicology of substance abuse and coauthored a 2023 assessment of the pros and cons of these drugs in the Annual Review of Pharmacology and Toxicology. But, he adds, that doesn’t mean there aren’t better things to come.

Cannabis’s long medical history

Cannabis has been used for centuries for all manner of reasons, from squashing anxiety or pain to spurring appetite and salving seizures. In 2018, a cannabis-derived medicine — Epidiolex, consisting of purified CBD — was approved for controlling seizures in some patients. Some people with serious conditions, including schizophrenia, obsessive compulsive disorder, Parkinson’s and cancer, self-medicate with cannabis in the belief that it will help them, and Mechoulam sees the promise. “There are a lot of papers on [these] diseases and the effects of cannabis (or individual cannabinoids) on them. Most are positive,” he tells Knowable Magazine.

That’s not to say cannabis use comes with zero risks. Silva points to research suggesting that daily cannabis users have a higher risk of developing psychotic disorders, depending on the potency of the cannabis; one paper showed a 3.2 to 5 times higher risk. Longtime chronic users can develop cannabinoid hyperemesis syndrome, characterized by frequent vomiting. Some public health experts worry about impaired driving, and some recreational forms of cannabis contain contaminants like heavy metals with nasty effects.

Finding medical applications for cannabinoids means understanding their pharmacology and balancing their pros and cons.

Mechoulam played a role in the early days of research into cannabis’s possible clinical uses. Based on anecdotal reports stretching back into ancient times of cannabis helping with seizures, he and his colleagues looked at the effects of THC and CBD on epilepsy. They started in mice and, since CBD showed no toxicity or side effects, moved on to people. In 1980, then at the Hebrew University of Jerusalem, Mechoulam co-published results from a tiny, 4.5-month trial of patients with epilepsy who weren’t being helped by existing drugs. The results seemed promising: Out of eight people taking CBD, four had almost no attacks throughout the study, and three saw partial improvement. Only one patient wasn’t helped at all.

“We assumed that these results would be expanded by pharmaceutical companies, but nothing happened for over 30 years,” writes Mechoulam in his autobiographical article. It wasn’t until 2018 that the US Food and Drug Administration approved Epidiolex for treating epileptic seizures in people with certain rare and severe medical conditions. “Thousands of patients could have been helped over the four decades since our original publication,” writes Mechoulam.

Drug approval is a necessarily long process, but for cannabis there have been the additional hurdles of legal roadblocks, as well as the difficulty in obtaining patent protections for natural compounds. The latter makes it hard for a pharmaceutical company to financially justify expensive human trials and the lengthy FDA approval process.

In the United Nations’ 1961 Single Convention on Narcotic Drugs, cannabis was slotted into the most restrictive categories: Schedule I (highly addictive and liable to abuse) and its subgroup, Schedule IV (with limited, if any, medicinal uses). The UN removed cannabis from Schedule IV only in December 2020 and, although cannabis has been legalized or decriminalized in several countries and most US states, it still remains (controversially) on both the US’s and the UN’s Schedule I, the same category as heroin. The US cannabis research bill, passed into law in December 2022, is expected to help ease some of the issues in working with cannabis and cannabinoids in the lab.

To date, the FDA has only licensed a handful of medicinal drugs based on cannabinoids, and so far they’re based only on THC and CBD. Alongside Epidiolex, the FDA has approved synthetic THC and a THC-like compound to fight nausea in patients undergoing chemotherapy and weight loss in patients with cancer or AIDS. But there are hints of many other possible uses. The National Institutes of Health registry of clinical trials lists hundreds of efforts underway around the world to study the effect of cannabinoids on autism, sleep, Huntington’s Disease, pain management and more.

In recent years, says Mechoulam, interest has expanded beyond THC and CBD to other cannabis compounds such as cannabigerol (CBG), which Mechoulam and his colleague Yehiel Gaoni discovered back in 1964. His team has made derivatives of CBG that have anti-inflammatory and pain relief properties in mice (for example, reducing the pain felt in a swollen paw) and can prevent obesity in mice fed high-fat diets. A small clinical trial of the impacts of CBG on attention-deficit hyperactivity disorder is being undertaken this year. Mechoulam says that the methyl ester form of another chemical, cannabidiolic acid, also seems “very promising” — in rats, it can suppress nausea and anxiety and act as an antidepressant in an animal model of the mood disorder.

But if the laundry list of possible benefits of all the many cannabinoids is huge, the hard work has not yet been done to prove their utility. “It’s been very difficult to try and characterize the effects of all the different ones,” says Sam Craft, a psychology PhD student who studies cannabinoids at the University of Bath in the UK. “The science hasn’t really caught up with all of this yet.”

A natural version in our bodies

Part of the reason that cannabinoids have such far-reaching effects is that, as Mechoulam helped to discover, they’re part of natural human physiology.

In 1988, researchers reported the discovery of a cannabinoid receptor in rat brains, CB1 (researchers would later find another, CB2, and map them both throughout the human body). Mechoulam reasoned there wouldn’t be such a receptor unless the body was pumping out its own chemicals similar to plant cannabinoids, so he went hunting for them. He would drive to Tel Aviv to buy pig brains being sold for food, he remembers, and bring them back to the lab. He found two molecules with cannabinoid-like activity: anandamide (named after the Sanskrit word ananda for bliss) and 2-AG.

These endocannabinoids, as they’re termed, can alter our mood and affect our health without us ever going near a joint. Some speculate that endocannabinoids may be responsible, in part, for personality quirks, personality disorders or differences in temperament.

Animal and cell studies hint that modulating the endocannabinoid system could have a huge range of possible applications, in everything from obesity and diabetes to neurodegeneration, inflammatory diseases, gastrointestinal and skin issues, pain and cancer. Studies have reported that endocannabinoids or synthetic creations similar to the natural compounds can help mice recover from brain trauma, unblock arteries in rats, fight antibiotic-resistant bacteria in petri dishes and alleviate opiate addiction in rats. But the endocannabinoid system is complicated and not yet well understood; no one has yet administered endocannabinoids to people, leaving what Mechoulam sees as a gaping hole of knowledge, and a huge opportunity. “I believe that we are missing a lot,” he says.

“This is indeed an underexplored field of research,” agrees Silva, and it may one day lead to useful pharmaceuticals. For now, though, most clinical trials are focused on understanding the workings of endocannabinoids and their receptors in our bodies (including how everything from probiotics to yoga affects levels of the chemicals).

‘Toxic effects’ of synthetics

In the wake of the discovery of CB1 and CB2, many researchers focused on designing new synthetic molecules that would bind to these receptors even more strongly than plant cannabinoids do. Pharmaceutical companies have pursued such synthetic cannabinoids for decades, but so far, says Craft, without much success, and with some missteps. A drug called Rimonabant, which bound tightly to the CB1 receptor but acted in opposition to CB1’s usual effect, was approved in Europe and other nations (but not the US) in the mid-2000s to help diminish appetite and in that way fight obesity. It was withdrawn worldwide in 2008 due to serious psychiatric side effects, including depression and suicidal thoughts.

Some of the synthetics invented originally by academics and drug companies have wound up in recreational drugs like Spice and K2. Such drugs have boomed and new chemical formulations keep popping up: Since 2008, 224 different ones have been spotted in Europe. These compounds, chemically tweaked to maximize psychoactive effects, can cause everything from headaches and paranoia to heart palpitations, liver failure and death. “They have very toxic effects,” says Craft.

For now, says Silva, there is scarce evidence that existing synthetic cannabinoids are medicinally useful: As most of the drug candidates worked their way up the pipeline, adverse effects have tended to crop up. Because of that, says Silva, most pharmaceutical efforts to develop synthetic cannabinoids have been discontinued.

But that doesn’t mean all research has stopped; a synthetic cannabinoid called JWH-133, for example, is being investigated in rodents for its potential to reduce the size of breast cancer tumors. It’s possible to make tens of thousands of different chemical modifications to cannabinoids, and so, says Silva, “it is likely that some of these combinations may have therapeutic potential.” The endocannabinoid system is so important in the human body that there’s plenty of room to explore all kinds of medicinal angles. Mechoulam serves on the advisory board of Israel-based company EPM, for example, which aims specifically to develop medicines based on synthetic versions of a class of cannabinoid compounds called cannabinoid acids.

With all this work underway on the chemistry of these compounds and their workings within the human body, Mechoulam, now 92, sees a coming explosion in understanding the physiology of the endocannabinoid system. And with that, he says, “I assume that we shall have a lot of new drugs.”

10.1146/knowable-013123-1

This article originally appeared in Knowable Magazine, an independent journalistic endeavor from Annual Reviews.

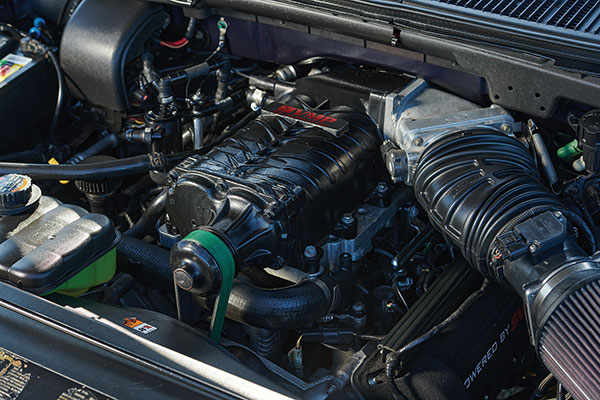
