Cannabis-derived products like delta-8 THC and delta-10 THC have flooded the US market – two immunologists explain the medicinal benefits and potential risks

These days you see signs for delta-8 THC, delta-10 THC and CBD, or cannabidiol, everywhere – at gas stations, convenience stores, vape shops and online. Many people are rightly wondering which of these compounds are legal, whether it is safe to consume them and which of their supposed medicinal benefits hold up to scientific scrutiny.

The rapid proliferation of cannabis products makes clear the need for the public to better understand what these compounds are derived from and what their true benefits and potential risks may be.

We are immunologists who have been studying the effects of marijuana cannabinoids on inflammation and cancer for more than two decades.

We see great promise in these products for medical applications. But we are also concerned that much remains unknown about their safety and their psychoactive properties.

Parsing the differences between marijuana and hemp

Cannabis sativa, the most common type of cannabis plant, has more than 100 compounds called cannabinoids.

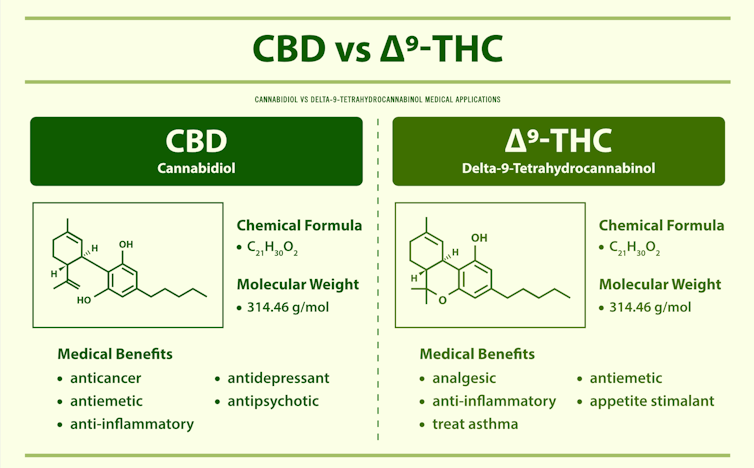

The most well-studied cannabinoids extracted from the cannabis plant include delta-9-tetrahydrocannabinol, or delta-9 THC, which is psychoactive. A psychoactive compound is one that affects how the brain functions, thereby altering mood, awareness, thoughts, feelings or behavior. Delta-9 THC is the main cannabinoid responsible for the high associated with marijuana. CBD, in contrast, is non-psychoactive.

Marijuana and hemp are two different varieties of the cannabis plant. In the U.S., federal regulations stipulate that cannabis plants containing more than 0.3% delta-9 THC by dry weight should be classified as marijuana, while plants containing 0.3% or less should be classified as hemp. The marijuana grown today has high levels – from 10% to 30% – of delta-9 THC, while hemp plants contain 5% to 15% CBD.

In 2018, the Food and Drug Administration approved the use of CBD extracted from the cannabis plant to treat certain severe forms of epilepsy. In addition to being a source of CBD, hemp plants can be used commercially to develop a variety of other products such as textiles, paper, medicine, food, animal feed, biofuel, biodegradable plastic and construction material.

Recognizing hemp's potentially broad applications, Congress removed it from the category of controlled substances when it passed the Agriculture Improvement Act of 2018, known as the Farm Bill. This made it legal to grow hemp.

When hemp-derived CBD saturated the market after passage of the Farm Bill, CBD manufacturers began harnessing their technical prowess to derive other forms of cannabinoids from CBD. This led to the emergence of delta-8 and delta-10 THC.

The chemical difference between delta-8, delta-9 and delta-10 THC is the position of a double bond on the chain of carbon atoms they structurally share. Delta-8 has this double bond on the eighth carbon atom of the chain, delta-9 on the ninth carbon atom, and delta-10 on the 10th carbon atom. These minor differences cause them to exert different levels of psychoactive effects.

The properties of delta-9 THC

Delta-9 THC, isolated in 1964, was one of the first cannabinoids to be extracted from the cannabis plant. Its highly psychoactive property stems from its ability to activate certain cannabinoid receptors, called CB1, in the brain. The CB1 receptor is like a lock that can be opened only by a specific key – in this case, delta-9 THC – which allows the compound to affect certain cell functions.

Delta-9 THC mimics the cannabinoids, called endocannabinoids, that our bodies naturally produce. Because delta-9 THC emulates the actions of endocannabinoids, it also affects the same brain functions they regulate, such as appetite, learning, memory, anxiety, depression, pain, sleep, mood, body temperature and immune responses.

The FDA approved delta-9 THC in 1985 to treat chemotherapy-induced nausea and vomiting in cancer patients and, in 1992, to stimulate appetite in HIV/AIDS patients.

The National Academy of Sciences has reported that cannabis is effective in alleviating chronic pain in adults and in improving muscle stiffness in patients with multiple sclerosis, an autoimmune disease. That report also suggested that cannabis may improve sleep outcomes and relieve symptoms of fibromyalgia, a medical condition in which patients complain of fatigue and pain throughout the body. In fact, a combination of delta-9 THC and CBD has been used to treat muscle stiffness and spasms in multiple sclerosis. This medicine, called Sativex, is approved in many countries but not yet in the U.S.

Delta-9 THC can also activate another type of cannabinoid receptor, called CB2, which is expressed mainly on immune cells. Studies from our laboratory have shown that delta-9 THC can suppress inflammation through the activation of CB2. This makes it highly effective in the treatment of autoimmune diseases like multiple sclerosis and colitis as well as inflammation of the lungs caused by bacterial toxins.

However, delta-9 THC has not been approved by the FDA for ailments such as pain, sleep disorders, fibromyalgia and autoimmune diseases. This has led people to self-medicate for such ailments, for which there are currently no effective pharmacological treatments.

Delta-8 THC, a chemical cousin of delta-9

Delta-8 THC is found in very small quantities in the cannabis plant. The delta-8 THC that is widely marketed in the U.S. is a derivative of hemp CBD.

Delta-8 THC binds to CB1 receptors less strongly than delta-9 THC, which is what makes it less psychoactive than delta-9 THC. People who seek delta-8 THC for medicinal benefits seem to prefer it over delta-9 THC because delta-8 THC does not cause them to get very high.

However, delta-8 THC binds to CB2 receptors about as strongly as delta-9 THC does. And because activation of CB2 plays a critical role in suppressing inflammation, delta-8 THC could potentially be preferable to delta-9 THC for treating inflammation, since it is less psychoactive.

There are no published clinical studies thus far on whether delta-8 THC can be used to treat the conditions that respond to delta-9 THC, such as chemotherapy-induced nausea or appetite loss in HIV/AIDS. However, animal studies from our laboratory have shown that delta-8 THC is also effective in the treatment of multiple sclerosis.

The sale of delta-8 THC, especially in states where marijuana is illegal, has become highly controversial. Federal agencies consider all compounds isolated from marijuana, as well as synthetic forms similar to THC, to be Schedule I controlled substances, which means they are deemed to have no currently accepted medical use and considerable potential for abuse.

However, hemp manufacturers argue that delta-8 THC should be legal because it is derived from CBD isolated from legally cultivated hemp plants.

The emergence of delta-10 THC

Delta-10 THC, another chemical cousin to delta-9 and delta-8, has recently entered the market.

Scientists do not yet know much about this new cannabinoid. Delta-10 THC is also derived from hemp CBD. People have anecdotally reported feeling euphoric and more focused after consuming delta-10 THC. Also, anecdotally, people who consume delta-10 THC say that it causes less of a high than delta-8 THC.

And virtually nothing is known about the medicinal properties of delta-10 THC. Yet it is being marketed in much the same way as the better-studied cannabinoids, with claims of an array of health benefits.

The future of cannabinoid derivatives

Research and clinical trials using marijuana or delta-9 THC to treat many medical conditions have been hampered by their classification as Schedule I substances. In addition, the psychoactive properties of marijuana and delta-9 THC cause side effects on brain function; the high associated with them makes some people feel sick, and others simply hate the sensation. This limits their usefulness in treating clinical disorders.

In contrast, we feel that delta-8 THC and delta-10 THC, as well as other potential cannabinoids that could be isolated from the cannabis plant or synthesized in the future, hold great promise. With their strong activity at CB2 receptors and their lower psychoactive properties, we believe they offer new therapeutic opportunities to treat a variety of medical conditions.

Prakash Nagarkatti, Professor of Pathology, Microbiology and Immunology, University of South Carolina, and Mitzi Nagarkatti, Professor of Pathology, Microbiology and Immunology, University of South Carolina

This article is republished from The Conversation under a Creative Commons license.