The pandemic has plunged us faster and deeper into immersive communication technologies. It’s a disrupted, confusing, sometimes exhausting world, but shifting both the tech and our expectations might make it a better one.

I am sitting in a darkened room, listening to upbeat music of the type often used at tech conferences to make attendees feel they are part of Something Big, waiting in eager anticipation for a keynote speaker to appear.

Bang on time, virtual communication expert Jeremy Bailenson arrives on the digital stage. He is here at the American Psychological Association’s November meeting, via a videoconferencing app, to somewhat ironically talk about Zoom fatigue and ways to battle it. “In late March, like all of us, I was sheltered in place,” Bailenson tells his invisible tele-audience. “After a week long of being on video calls for eight or nine hours a day, I was just exhausted.”

One of the pandemic’s many impacts was to throw everyone suddenly online — not just for business meetings but also for everything from birthday parties to schooling, romantic dates to science conferences. While the Internet thankfully has kept people connected during lockdowns, experiences haven’t been all good: There have been miscommunications, parties that fall flat, unengaged schoolkids.

Many found themselves tired, frustrated or feeling disconnected, with researchers left unsure as to exactly why and uncertain how best to tackle the problems. Sensing this research gap, Bailenson, director of Stanford University’s Virtual Human Interaction Lab, and colleagues quickly ramped up surveys to examine how people react to videoconferencing, and this February published a “Zoom Exhaustion & Fatigue Scale” to quantify people’s different types of exhaustion (see box). They found that having frequent, long, rapid-fire meetings made people more tired; many felt cranky and needed some alone time to decompress.

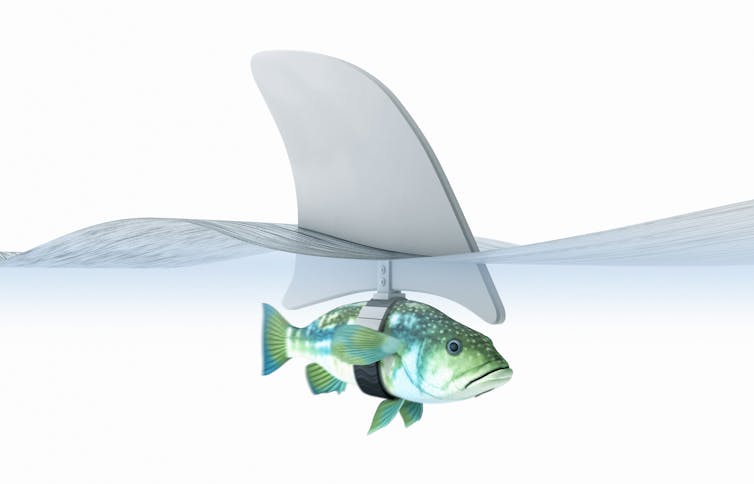

This reality stands in contrast to the rosy views painted by many enthusiasts over the years about the promises of tech-mediated communication, which has evolved over recent decades from text-based chat to videoconferencing and the gathering of avatars in virtual landscapes. The dream is to create ever more immersive experiences that allow someone to feel they are really in a different place with another person, through techniques ranging from augmented reality (which projects data or images onto a real-life scene) to virtual reality (where users typically wear goggles to make them feel they are elsewhere) to full-blown systems that involve a user’s sense of touch and smell.

The vision is that we would all be sitting in holographic boardrooms by now; all university students should be blowing up virtual labs rather than physical ones; people should feel as comfortable navigating virtual worlds and friendships as in-person realities. On the whole, this hasn’t yet come to pass. Highly immersive technologies have made inroads in niche applications like simulation training for sports and medicine, along with the video gaming industry — but they aren’t mainstream for everyday communication. The online environment Second Life, launched in 2003, offered a parallel online world as a companion space to the physical one; it saw monthly active users drop from a million in 2013 to half that in 2018. Google Glass, which aimed to provide augmented reality for wearers of a special camera-enabled pair of glasses, launched in 2013 mostly to widespread mockery.

As Zoom fatigue has highlighted, the road to more immersive technologies for communication isn’t always a smooth one. But experts across fields from education to communication, computer science and psychology agree that deeper immersion still holds great promise for making people feel more connected, and they are aiming to help navigate the bumpy road to its best adoption. “I hope that no pandemic ever happens again, but if it does, I hope we have better technologies than we have now,” says Fariba Mostajeran, a computer scientist who studies human-computer interaction and virtual reality at Hamburg University. “For people who live alone, it has been really hard not to be able to hug friends and family, to feel people. I’m not sure if we can achieve that 10 years from now, but I hope we can.”

For distanced communication to live up to its full potential, “there will need to be an evolution,” Bailenson writes me, “both on the technology and on the social norms.”

Sudden shift

It takes a while for societies to adapt to a new form of communication. When the telephone was first invented, no one knew how to answer it: Alexander Graham Bell suggested that the standard greeting should be “Ahoy.” This goes to show not just that social use of technology evolves, but also that the inventors of that technology are rarely in the driver’s seat.

Email has danced between being extremely casual and being as formal as letter-writing as perceptions, expectations and storage space have shifted. Texting, tweeting and social media platforms like Facebook and Snapchat are all experiencing their own evolutions, including the invention of emojis to help convey meaning and tone. Ever since prehistoric people started scratching on cave walls, humanity has experimented with the best ways to convey thoughts, facts and feelings.

Some of that optimization is based on the logistical advantages and disadvantages of different platforms, and some of it is anchored in our social expectations. Experience has taught us to expect business phone calls to be short and sharp, for example, whereas we expect real-life visits with family and friends to accommodate a slow exchange of information that may last days. Expectations for video calls are still in flux: Do you need to maintain eye contact, as you would for an in-person visit, or is it OK to check your email, as you might do in the anonymity of a darkened lecture hall?

Travel often demarcates an experience, focusing attention and solidifying work-life boundaries — whether it’s a flight to a conference or a daily commute to the office. As the online world has sliced those rituals away, people have experimented with “fake commutes” (a walk around the house or block) to trick themselves into a similarly targeted mindset.

But while the evolution of technology use is always ongoing, the pandemic threw it into warp speed. Zoom reported having 300 million daily meeting participants by June 2020, compared to 10 million in December 2019. Zoom itself hosted its annual Zoomtopia conference online-only for the first time in October 2020; it attracted more than 50,000 attendees, compared to about 500 in 2017.

Some might see this as evidence that the tech is, thankfully, ready to accommodate lockdown-related demands. But on the other side of the coin, people have been feeling exhausted and disrupted.

Visual creatures

Humans are adapted to detect a lot of visual signals during conversations: small twitches, micro facial expressions, acts like leaning into a conversation or pulling away. Based on work starting in the 1940s and 1950s, researchers have estimated that such physical signals make up 65 to 70 percent of the “social meaning” of a conversation. “Humans are pretty bad at interpreting meaning without the face,” says psychologist Rachael Jack of the University of Glasgow, coauthor of an overview of how to study the meaning embedded in facial expressions in the Annual Review of Psychology. “Phone conversations can be difficult to coordinate and understand the social messages.”

People often try, subconsciously, to translate the visual and physical cues we pick up on in real life to the screen. In virtual worlds that support full-bodied avatars that move around a constructed space, Bailenson’s work has shown that people tend to intuitively have their virtual representatives stand a certain distance from each other, for example, mimicking social patterns seen in real life. The closer avatars get, the more they avoid direct eye contact to compensate for invasion of privacy (just as people do, for example, in an elevator).

Yet many of the visual or physical signals get mixed or muddled. “It’s a firehose of nonverbal cues, yet none of them mean the thing our brains are trained to understand,” Bailenson said in his keynote. During videoconferencing, people are typically looking at their screens rather than their cameras, for example, giving a false impression to others about whether they are making eye contact or not. The stacking of multiple faces on a screen likewise gives a false sense of who is looking at whom (someone may glance to their left to grab their coffee, but on screen it looks like they’re glancing at a colleague).

And during a meeting, everyone is looking directly at everyone else. In physical space, by contrast, usually all eyes are on the speaker, leaving most of the audience in relative and relaxed anonymity. “It’s just a mind-blowing difference in the amount of eye contact,” Bailenson said; he estimates that it’s at least 10 times higher in virtual meetings than in person.

Research has shown that the feeling of being watched (even by a static picture of a pair of eyes) causes people to change their behavior; they act more as they believe they are expected to act, more diligently and responsibly. This sounds positive, but it also causes a hit to self-esteem, says Bailenson. In effect, the act of being in a meeting can become something of a performance, leaving the actor feeling drained.

For all these reasons, online video is only sometimes a good idea, experts say. “It’s all contextual,” says Michael Stefanone, a communications expert at the University at Buffalo. “The idea that everyone needs video is wrong.”

Research has shown that if people need to establish a new bond of trust between them (like new work colleagues or potential dating partners), then “richer” technologies (video, say, as opposed to text) are better. This means, says Stefanone, that video is important for people with no prior history — “zero-history groups” like him and me. Indeed, despite a series of emails exchanged prior to our conversation, I get a different impression of Stefanone over Zoom than I did before, as he wrangles his young daughter down for a nap while we chat. I instantly feel I know him a little; this makes it feel more natural to trust his expertise. “If you’re meeting someone for the first time, you look for cues of affection, of deception,” he says.

But once a relationship has been established, Stefanone says, visual cues become less important. (“Email from a stranger is a pretty lean experience. Email from my old friend from grade school is a very rich experience; I get a letter from them and I can hear their laughter even if I haven’t seen them in a long time.”) Visual cues can even become detrimental if the distracting downsides of the firehose effect, alongside privacy issues and the annoyance of even tiny delays in a video feed, outweigh the benefits. “If I have a class of 150 students, I don’t need to see them in their bedrooms,” says Stefanone. He laughs, “I eliminate my own video feed during meetings, because I find myself just staring at my hair.”

In addition to simply turning off video streams occasionally, Bailenson also supports another, high-tech solution: replacing visual feeds with an automated intelligent avatar.

The idea is that your face onscreen is replaced by a cartoon; an algorithm generates facial expressions and gestures that match your words and tone as you speak. If you turn off your camera and get up to make a cup of tea, your avatar stays professionally seated and continues to make appropriate gestures. (Bailenson demonstrates during his keynote, his avatar gesturing away as he talks: “You guys don’t know this but I’ve stood up…. I’m pacing, I’m stretching, I’m eating an apple.”) Bailenson was working with the company Loom.ai to develop this particular avatar plug-in for Zoom, but he says that specific project has since been dropped. “Someone else needs to build one,” he later tells me.

Such solutions could be good, says Jack, who studies facial communication cues, for teachers or lecturers who want visual feedback from their listeners to keep them motivated, without the unnecessary or misleading distractions that often come along with “real” images.

All together now

This highlights one of the benefits of virtual communication: If it can’t quite perfectly mimic real-life interaction, perhaps it can be better. “You take things out that you can’t take out in real life,” says Jack. “You can block people, for example.” The virtual landscape also offers the potential to involve more people in more activities that might otherwise be unavailable to them because of cost or location. Science conferences have seen massive increases in participation after being forced to thrust their events online. The American Physical Society meeting, for example, drew more than 7,200 registrants in 2020, compared with an average of 1,600 to 1,800 in earlier years.

In a November 2020 online gathering of the American Anthropological Association, anthropologist and conference chair Mayanthi Fernando extolled the virtues of virtual conferences in her opening speech, for boosting not just the number but also the range of people who were attending. That included people from other disciplines, people who would otherwise be unable to attend due to childcare issues, and people, especially from the Global South, without the cash for in-person attendance. Videoconferencing technologies also tend to promote engagement, she noted, between people of different ages, languages, countries and ranks. “Zoom is a great leveler; everyone is in the same sized box,” she said. (The same meeting, however, suffered from “bombers” dropping offensive material into chat rooms.)

Technology also offers huge opportunity for broadening the scope and possibilities of education. EdX, one of the largest platforms for massive open online courses (MOOCs), started 2020 with 80 million enrollments; that went up to 100 million by May. Online courses are often based around prerecorded video lectures with text-based online chat, but there are other options too: The Open University in the UK, for example, hosts OpenSTEM Labs that allow students to remotely access real scanning electron microscopes, optical telescopes on Tenerife and a sandbox with a Mars rover replica.

There is great potential for online-based learning that isn’t yet being realized, says Stephen Harmon, interim executive director of the Center for 21st Century Universities at Georgia Tech. “I love technology,” says Harmon. “But the tech we use [for teaching] now, like BlueJeans or Zoom, they’re not built for education, they’re built for videoconferencing.” He hopes to see further development of teaching-tailored technologies that can monitor student engagement during classes or support in-class interaction within small groups. Platforms like Engage, for example, use immersive VR in an attempt to enhance a student’s experience during a virtual field trip or meeting.

Full immersion

For many developers the ultimate goal is still to create a seamless full-immersion experience — to make people feel like they’re “really there.” Bailenson’s Virtual Human Interaction Lab at Stanford is state of the art, with a pricey setup including goggles, speakers and a moveable floor. Participants in his VR experiments have been known to scream and run from encounters with virtual earthquakes and falling objects.

There are benefits to full immersion that go beyond the wow factor. Guido Makransky, an educational psychologist at the University of Copenhagen, says that virtual reality’s ability to increase a person’s sense of presence, and their agency, when compared to passive media like watching a video or reading a book, is extremely important for education. “Presence really creates interest,” he says. “Interest is really important.” Plenty of studies have also shown how experiencing life in another virtual body (of a different age, for example, or race) increases empathy, he says. Makransky is now working on a large study to examine how experiencing the pandemic in the body of a more vulnerable person helps to improve willingness to be vaccinated.

But VR also has limitations, especially for now. Makransky notes that the headsets can be bulky, and if the software isn’t well designed the VR can be distracting and add to a student’s “cognitive load.” Some people get “cyber sickness” — nausea akin to motion sickness caused by a mismatch between visual and physical motion cues. For now, the burdens and distractions of immersive VR can make it less effective at promoting learning than, for example, a simpler video experience.

Mostajeran, who looks primarily at uses of VR for health, found in a recent study that a slideshow of forest snaps was more effective at reducing stress than an immersive VR forest jaunt. For now, she says, lower-immersion technology is fine or better for calming patients. But, again, that may be just because VR technology is new, unfamiliar and imperfect. “When it’s not perfect, people fall back on what they trust,” she says.

All technology needs to surpass a certain level of convenience, cost and sophistication before it’s embraced; it was the same for video calling. Video phones go much further back than most people realize: In 1936, German post offices hosted a public video call service, and AT&T had a commercial product on the market around 1970. But these systems were expensive and clunky, and few people wanted to use them: They were too far ahead of their time to find a market.

Both Mostajeran and Makransky say they’re impressed with how much VR technologies have improved in recent years, getting lighter, less bulky and wireless. Makransky says he was surprised by how easy it was to find people who already own VR headsets and were happy to participate in his new vaccination study: 680 volunteers signed up in just a few weeks. As the technology improves and more people have access to it and get comfortable with it, the studies and applications are expected to boom.

Whether that will translate to everyone using immersive VR for social and business meetings, and when, is up for debate. “We just missed it by a year or two, I think,” said Bailenson optimistically after his keynote presentation.

For now, the researchers say, the best way to get the most from communication media is to be aware of what you’re trying to achieve with it and adapt accordingly. People in long-distance relationships, for example, get value out of letting their cameras run nonstop, letting their partners “be in the room” with them even while they cook, clean or watch TV. Others, in the business world, aim for a far more directed and efficient exchange of information. Video is good for some of these goals; audio-only is best for others.

“This has been a heck of an experiment,” says Stefanone about the last year of online engagement. For all the pitfalls of social media and online work, he adds, there are definitely upsides. He, for one, won’t be jumping on any planes when the pandemic ends — he has proved he can do his academic job effectively from home while also spending time with his daughter. But it’s hard to know where the technology will ultimately take us, he says. “The way people adapt never follows the route we expect.”

This article is part of Reset: The Science of Crisis & Recovery, an ongoing Knowable Magazine series exploring how the world is navigating the coronavirus pandemic, its consequences and the way forward. Reset is supported by a grant from the Alfred P. Sloan Foundation.