Scientists have been chasing the dream of harnessing the reactions that power the Sun since the dawn of the atomic era. Interest, and investment, in the carbon-free energy source is heating up.

For the better part of a century now, astronomers and physicists have known that a process called thermonuclear fusion has kept the Sun and the stars shining for millions or even billions of years. And ever since that discovery, they’ve dreamed of bringing that energy source down to Earth and using it to power the modern world.

It’s a dream that’s only become more compelling today, in the age of escalating climate change. Harnessing thermonuclear fusion and feeding it into the world’s electric grids could help make all our carbon dioxide-spewing coal- and gas-fired plants a distant memory. Fusion power plants could offer zero-carbon electricity that flows day and night, with no worries about wind or weather — and without the drawbacks of today’s nuclear fission plants, such as potentially catastrophic meltdowns and radioactive waste that has to be isolated for thousands of centuries.

In fact, fusion is the exact opposite of fission: Instead of splitting heavy elements such as uranium into lighter atoms, fusion generates energy by merging various isotopes of light elements such as hydrogen into heavier atoms.

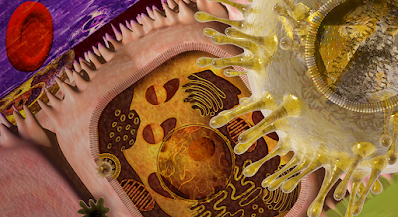

To make this dream a reality, fusion scientists must ignite fusion here on the ground — but without access to the crushing levels of gravity that accomplish this feat at the core of the Sun. Doing it on Earth means putting those light isotopes into a reactor and finding a way to heat them to hundreds of millions of degrees centigrade — turning them into an ionized “plasma” akin to the insides of a lightning bolt, only hotter and harder to control. And it means finding a way to control that lightning, usually with some kind of magnetic field that will grab the plasma and hold on tight while it writhes, twists and tries to escape like a living thing.

Both challenges are daunting, to say the least. It was only in late 2022, in fact, that a multibillion-dollar fusion experiment in California finally got a tiny isotope sample to put out more thermonuclear energy than went in to ignite it. And that event, which lasted only about one-tenth of a nanosecond, had to be triggered by the combined output of 192 of the world’s most powerful lasers.

Today, though, the fusion world is awash in plans for much more practical machines. Novel technologies such as high-temperature superconductors are promising to make fusion reactors smaller, simpler, cheaper and more efficient than once seemed possible. And better still, all those decades of slow, dogged progress seem to have passed a tipping point, with fusion researchers now experienced enough to design plasma experiments that work pretty much as predicted.

“There is a coming of age of technological capability that now matches up with the challenge of this quest,” says Michl Binderbauer, CEO of the fusion firm TAE Technologies in Southern California.

Indeed, more than 40 commercial fusion firms have been launched since TAE became the first in 1998, most of them in the past five years, and many with a power-reactor design that they hope to have operating in the next decade or so. “I keep thinking that, oh sure, we’ve reached our peak,” says Andrew Holland, who maintains a running count as CEO of the Fusion Industry Association, an advocacy group he founded in 2018 in Washington, DC. “But no, we keep seeing more and more companies come in with different ideas.”

None of this has gone unnoticed by private investment firms, which have backed the fusion startups with some $6 billion and counting. This combination of new technology and private money creates a happy synergy, says Jonathan Menard, head of research at the Department of Energy’s Princeton Plasma Physics Laboratory in New Jersey, and not a participant in any of the fusion firms.

Compared with the public sector, companies generally have more resources for trying new things, says Menard. “Some will work, some won’t. Some might be somewhere in between,” he says. “But we’re going to find out, and that’s good.”

Granted, there’s ample reason for caution — starting with the fact that none of these firms has so far shown that it can generate net fusion energy even briefly, much less ramp up to a commercial-scale machine within a decade. “Many of the companies are promising things on timescales that generally we view as unlikely,” Menard says.

But then, he adds, “we’d be happy to be proven wrong.”

With more than 40 companies trying to do just that, we’ll know soon enough if one or more of them succeeds. In the meantime, to give a sense of the possibilities, here is an overview of the challenges that every fusion reactor has to overcome, and a look at some of the best-funded and best-developed designs for meeting those challenges.

Prerequisites for fusion

The first challenge for any fusion device is to light the fire, so to speak: It has to take whatever mix of isotopes it’s using as fuel, and get the nuclei to touch, fuse and release all that beautiful energy.

This means literally “touch”: Fusion is a contact sport, and the reaction won’t even begin until the nuclei hit head on. What makes this tricky is that every atomic nucleus contains positively charged protons and — Physics 101 — positive charges electrically repel each other. So the only way to overcome that repulsion is to get the nuclei moving so fast that they crash and fuse before they’re deflected.

This need for speed requires a plasma temperature of at least 100 million degrees C. And that’s just for a fuel mix of deuterium and tritium, the two heavy isotopes of hydrogen. Other isotope mixes would have to get much hotter — which is why “DT” is still the fuel of choice in most reactor designs.
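To put that temperature in the units physicists use for particle energies, a temperature can be converted into a typical thermal energy per particle by multiplying by the Boltzmann constant. The short sketch below does that conversion; the function name is purely illustrative, and the result is a characteristic energy scale rather than the energy of any one nucleus.

```python
# Boltzmann constant in electronvolts per kelvin (CODATA value).
BOLTZMANN_EV_PER_K = 8.617333262e-5


def thermal_energy_kev(temp_celsius: float) -> float:
    """Return the characteristic thermal energy k_B * T in kiloelectronvolts."""
    temp_kelvin = temp_celsius + 273.15  # at these temperatures the offset is negligible
    return BOLTZMANN_EV_PER_K * temp_kelvin / 1000.0


# The 100-million-degree threshold quoted for deuterium-tritium fuel.
dt_threshold_kev = thermal_energy_kev(100e6)
print(f"100 million degrees C is roughly {dt_threshold_kev:.1f} keV per particle")
```

At 100 million degrees, this works out to a little under 9 keV per particle.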

But whatever the fuel, the quest to reach fusion temperatures generally comes down to a race between researchers’ efforts to pump in energy with an external source such as microwaves, or high-energy beams of neutral atoms, and plasma ions’ attempts to radiate that energy away as fast as they receive it.

The ultimate goal is to get the plasma past the temperature of “ignition,” which is when fusion reactions will start to generate enough internal energy to make up for that radiating away of energy — and power a city or two besides.

But this just leads to the second challenge: Once the fire is lit, any practical reactor will have to keep it lit — as in, confine these superheated nuclei so that they’re close enough to maintain a reasonable rate of collisions for long enough to produce a useful flow of power.

In most reactors, this means protecting the plasma inside an airtight chamber, since stray air molecules would cool down the plasma and quench the reaction. But it also means holding the plasma away from the chamber walls, which are so much colder than the plasma that the slightest touch will also kill the reaction. The problem is, if you try to hold the plasma away from the walls with a non-physical barrier, such as a strong magnetic field, the flow of ions will quickly get distorted and rendered useless by currents and fields within the plasma.

Unless, that is, you’ve shaped the field with a great deal of care and cleverness — which is why the various confinement schemes account for some of the most dramatic differences between reactor designs.

Finally, practical reactors will have to include some way of extracting the fusion energy and turning it into a steady flow of electricity. Although there has never been any shortage of ideas for this last challenge, the details depend critically on which fuel mix the reactor uses.

With deuterium-tritium fuel, for example, the reaction produces most of its energy in the form of high-speed particles called neutrons, which can’t be confined with a magnetic field because they don’t have a charge. This lack of an electric charge allows the neutrons to fly not only through the magnetic fields but also through the reactor walls. So the plasma chamber will have to be surrounded by a “blanket”: a thick layer of some heavy material like lead or steel that will absorb the neutrons and turn their energy into heat. The heat can then be used to boil water and generate electricity via the same kind of steam turbines used in conventional power plants.
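The claim that neutrons carry most of the energy follows from simple bookkeeping, which can be sketched in a few lines. The total energy released is the mass that disappears in the reaction, and because the two products fly apart with equal and opposite momentum, the lighter neutron takes the larger share. The masses below are rounded tabulated atomic masses, and the split uses a non-relativistic approximation that ignores the small initial motion of the fuel nuclei.

```python
# Rounded atomic masses in unified atomic mass units (u).
MASS_U = {
    "D": 2.014101778,    # deuterium
    "T": 3.016049281,    # tritium
    "He4": 4.002603254,  # helium-4
    "n": 1.008664916,    # neutron
}
U_TO_MEV = 931.494  # energy equivalent of 1 u, in MeV


def dt_energy_release_mev() -> float:
    """Energy released by D + T -> He-4 + n, from the mass difference."""
    mass_defect = MASS_U["D"] + MASS_U["T"] - MASS_U["He4"] - MASS_U["n"]
    return mass_defect * U_TO_MEV


def neutron_energy_share() -> float:
    """Fraction of the released energy carried by the neutron.

    With equal and opposite momenta, kinetic energy divides in inverse
    proportion to mass, so the neutron gets m_He / (m_He + m_n).
    """
    return MASS_U["He4"] / (MASS_U["He4"] + MASS_U["n"])


total_mev = dt_energy_release_mev()
neutron_mev = total_mev * neutron_energy_share()
print(f"Total release: {total_mev:.1f} MeV, of which neutron: {neutron_mev:.1f} MeV")
```

In this approximation the neutron leaves with about four-fifths of the energy, which is why the blanket, not the magnetic field, has to do most of the energy capture in a DT machine.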

Many DT reactor designs also call for including some lithium in the blanket material, so that the neutrons will react with that element to produce new tritium nuclei. This step is critical: Since each DT fusion event consumes one tritium nucleus, and since this isotope is radioactive and doesn’t exist in nature, the reactor would soon run out of fuel if it didn’t exploit this opportunity to replenish it.
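A rough estimate shows how quickly a DT reactor would go through its tritium. The sketch below assumes a hypothetical plant producing 1 gigawatt of fusion power (a figure chosen only for illustration, not taken from any design discussed here) and uses the standard value of about 17.6 MeV released per DT reaction, with one tritium nucleus consumed each time.

```python
MEV_TO_JOULE = 1.602176634e-13       # joules per MeV
ATOMIC_MASS_UNIT_KG = 1.66053907e-27  # kilograms per atomic mass unit
DT_ENERGY_MEV = 17.6                  # energy released per DT fusion event
TRITIUM_MASS_U = 3.016                # mass of one tritium atom, in u
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def tritium_burned_kg_per_year(fusion_power_watts: float) -> float:
    """Tritium consumed per year of continuous operation at the given fusion power."""
    reactions_per_second = fusion_power_watts / (DT_ENERGY_MEV * MEV_TO_JOULE)
    kg_per_second = reactions_per_second * TRITIUM_MASS_U * ATOMIC_MASS_UNIT_KG
    return kg_per_second * SECONDS_PER_YEAR


# Hypothetical 1 GW (fusion power) plant running around the clock.
burn_kg = tritium_burned_kg_per_year(1e9)
print(f"Tritium burned: about {burn_kg:.0f} kg per year")
```

Under those assumptions the plant burns tens of kilograms of tritium every year, all of which would have to be bred in the blanket or supplied from outside.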

The complexities of DT fuel are cumbersome enough that some of the more audacious fusion startups have opted for alternative fuel mixes. Binderbauer’s TAE, for example, is aiming for what many consider the ultimate fusion fuel: a mix of protons and boron-11. Not only are both ingredients stable, nontoxic and abundant, their sole reaction product is a trio of positively charged helium-4 nuclei whose energy is easily captured with magnetic fields, with no need for a blanket.

But alternative fuels present different challenges, such as the fact that TAE will have to get its proton-boron-11 mix up to fusion temperatures of at least a billion degrees Celsius, roughly 10 times higher than the DT threshold.

A plasma donut

The basics of these three challenges — igniting the plasma, sustaining the reaction, and harvesting the energy — were clear from the earliest days of fusion energy research. And by the 1950s, innovators in the field had begun to come up with any number of schemes for solving them — most of which fell by the wayside after 1968, when Soviet physicists went public with a design they called the tokamak.

Like several of the earlier reactor concepts, tokamaks featured a plasma chamber something like a hollow donut — a shape that allowed the ions to circulate endlessly without hitting anything — and controlled the plasma ions with magnetic fields generated by current-carrying coils wrapped around the outside of the donut.

But tokamaks also featured a new set of coils that caused an electric current to go looping around and around the donut right through the plasma, like a circular lightning bolt. This current gave the magnetic fields a subtle twist that went a surprisingly long way toward stabilizing the plasma. And while the first of these machines still couldn’t get anywhere close to the temperatures and confinement times a power reactor would need, the results were so much better than anything seen before that the fusion world pretty much switched to tokamaks en masse.

Since then, more than 200 tokamaks of various designs have been built worldwide, and physicists have learned so much about tokamak plasmas that they can confidently predict the performance of future machines. That confidence is why an international consortium of funding agencies has been willing to commit more than $20 billion to build ITER (Latin for “the way”): a tokamak scaled up to the size of a 10-story building. Under construction in southern France since 2010, ITER is expected to start experiments with deuterium-tritium fuel in 2035. And when it does, physicists are quite sure that ITER will be able to hold and study burning fusion plasmas for minutes at a time, providing a unique trove of data that will hopefully be useful in the construction of power reactors.

But ITER was also designed as a research machine with a lot more instrumentation and versatility than a working power reactor would ever need — which is why two of today’s best-funded fusion startups are racing to develop tokamak reactors that would be a lot smaller, simpler and cheaper.

First out of the gate was Tokamak Energy, a UK firm founded in 2009. The company has received some $250 million in venture capital over the years to develop a reactor based on “spherical tokamaks” — a particularly compact variation that looks more like a cored apple than a donut.

But coming up fast is Commonwealth Fusion Systems in Massachusetts, an MIT spinoff that wasn’t even launched until 2018. Although Commonwealth’s tokamak design uses a more conventional donut configuration, access to MIT’s extensive fundraising network has already brought the company nearly $2 billion.

Both firms are among the first to generate their magnetic fields with cables made of high-temperature superconductors (HTS). Discovered in the 1980s but only recently available in cable form, these materials can carry an electrical current without resistance even at a relatively torrid 77 Kelvins, or -196 degrees Celsius, warm enough to be achieved with liquid nitrogen or helium gas. This makes HTS cables much easier and cheaper to cool than the ones that ITER will use, since those will be made of conventional superconductors that need to be bathed in liquid helium at 4 Kelvins.
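One way to see why the warmer operating temperature matters is the thermodynamic minimum cost of refrigeration. The sketch below converts the two temperatures quoted above and applies the ideal (Carnot) limit for a refrigerator rejecting heat to room temperature. The 300 K ambient temperature and the function names are assumptions for illustration, and real cryogenic plants fall well short of this ideal, so the numbers indicate only the trend.

```python
def kelvin_to_celsius(temp_kelvin: float) -> float:
    """Convert an absolute temperature to degrees Celsius."""
    return temp_kelvin - 273.15


def ideal_cooling_work_per_watt(cold_kelvin: float, ambient_kelvin: float = 300.0) -> float:
    """Minimum work (watts) needed to remove 1 watt of heat at cold_kelvin.

    This is the Carnot limit for an ideal refrigerator: (T_hot - T_cold) / T_cold.
    """
    return (ambient_kelvin - cold_kelvin) / cold_kelvin


for label, temp_k in [("HTS cable", 77.0), ("conventional superconductor", 4.0)]:
    work = ideal_cooling_work_per_watt(temp_k)
    print(f"{label}: {temp_k:.0f} K = {kelvin_to_celsius(temp_k):.2f} C, "
          f"at least {work:.1f} W of work per W of heat removed")
```

Even in this idealized picture, pulling a watt of heat out at 4 kelvins costs roughly 25 times more work than pulling it out at 77 kelvins.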

But more than that, HTS cables can generate much stronger magnetic fields in a much smaller space than their low-temperature counterparts — which means that both companies have been able to shrink their power plant designs to a fraction of the size of ITER.

As dominant as tokamaks have been, however, most of today’s fusion startups are not using that design. They’re reviving older alternatives that could be smaller, simpler and cheaper than tokamaks, if someone could make them work.

Plasma vortices

Prime examples of these revived designs are fusion reactors based on smoke-ring-like plasma vortices known as the field-reversed configuration (FRC). Resembling a fat, hollow cigar that spins on its axis like a gyroscope, an FRC vortex holds itself together with its own internal currents and magnetic fields — which means there’s no need for an FRC reactor to keep its ions endlessly circulating around a donut-shaped plasma chamber. In principle, at least, the vortex will happily stay put inside a straight cylindrical chamber, requiring only a light-touch external field to hold it steady. This means that an FRC-based reactor could ditch most of those pricey, power-hungry external field coils, making it smaller, simpler and cheaper than a tokamak or almost anything else.

In practice, unfortunately, the first experiments with these whirling plasma cigars back in the 1960s found that they always seemed to tumble out of control within a few hundred microseconds, which is why the approach was mostly pushed aside in the tokamak era.

Yet the basic simplicity of an FRC reactor never fully lost its appeal. Nor did the fact that FRCs could potentially be driven to extreme plasma temperatures without flying apart — which is why TAE chose the FRC approach in 1998, when the company started on its quest to exploit the 1-billion-degree proton-boron-11 reaction.

Binderbauer and his TAE cofounder, the late physicist Norman Rostoker, had come up with a scheme to stabilize and sustain the FRC vortex indefinitely: Just fire in beams of fresh fuel along the vortex’s outer edges to keep the plasma hot and the spin rate high.

It worked. By the mid-2010s, the TAE team had shown that those particle beams coming in from the side would, indeed, keep the FRC spinning and stable for as long as the beam injectors had power — just under 10 milliseconds with the lab’s stored-energy supply, but as long as they want (presumably) once they can siphon a bit of spare energy from a proton-boron-11-burning reactor. And by 2022, they had shown that their FRCs could retain that stability well above 70 million degrees C.

With the planned 2025 completion of its next machine, the 30-meter-long Copernicus, TAE is hoping to actually reach burn conditions above 100 million degrees (albeit using plain hydrogen as a stand-in). This milestone should give the TAE team essential data for designing their DaVinci machine: a reactor prototype that will (they hope) start feeding p-B11-generated electricity into the grid by the early 2030s.

Plasma in a can

Meanwhile, General Fusion of Vancouver, Canada, is partnering with the UK Atomic Energy Authority to construct a demonstration reactor for perhaps the strangest concept of them all, a 21st-century revival of magnetized target fusion. This 1970s-era concept amounts to firing a plasma vortex into a metal can, then crushing the can. Do that fast enough and the trapped plasma will be compressed and heated to fusion conditions. Do it often enough to get a more or less continuous string of fusion energy pulses back out, and you’ll have a power reactor.

In General Fusion’s current concept, the metal can will be replaced by a molten lead-lithium mix that’s held by centrifugal force against the sides of a cylindrical container spinning at 400 RPM. At the start of each reactor cycle, a downward-pointing plasma gun will inject a vortex of ionized deuterium-tritium fuel — the “magnetized target” — which will briefly turn the whirling, metal-lined container into a miniature spherical tokamak. Next, a forest of compressed-air pistons arrayed around the container’s outside will push the lead-lithium mix into the vortex, crushing it from a diameter of three meters down to 30 centimeters within about five milliseconds, and raising the deuterium-tritium to fusion temperatures.
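The figures quoted for the compression stroke imply some striking numbers, which the arithmetic below spells out. It assumes, purely for illustration, that the plasma cavity shrinks as a roughly spherical volume and that the liquid-metal wall moves inward at a steady average speed; the real geometry and motion will be more complicated.

```python
# Figures quoted in the text for the compression stroke.
START_DIAMETER_M = 3.0
END_DIAMETER_M = 0.30
COMPRESSION_TIME_S = 5e-3

# How much the cavity shrinks along any one dimension.
linear_ratio = START_DIAMETER_M / END_DIAMETER_M

# Assumption: a roughly spherical cavity that keeps its shape,
# so the volume scales as the cube of the diameter.
volume_ratio = linear_ratio ** 3

# Average inward speed of the liquid-metal wall (change in radius over time).
mean_wall_speed_m_s = (START_DIAMETER_M - END_DIAMETER_M) / 2 / COMPRESSION_TIME_S

print(f"Diameter shrinks {linear_ratio:.0f}x, volume about {volume_ratio:.0f}x")
print(f"Average wall speed: about {mean_wall_speed_m_s:.0f} m/s")
```

On these assumptions the plasma is squeezed into about a thousandth of its starting volume by a wall of molten metal closing in at a few hundred meters per second.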

The resulting blast will then strike the molten lead-lithium mix, pushing it back out to the rotating cylinder walls and resetting the system for the next cycle — which will start about a second later. Meanwhile, on a much slower timescale, pumps will steadily circulate the molten metal to the outside so that heat exchangers can harvest the fusion energy it’s absorbed, and other systems can scavenge the tritium generated from neutron-lithium interactions.

All these moving parts require some intricate choreography, but if everything works the way the simulations suggest, the company hopes to build a full-scale, deuterium-tritium-burning power plant by the 2030s.

It’s anybody’s guess when (or if) the particular reactor concepts mentioned here will result in real commercial power plants — or whether the first to market will be one of the many alternative reactor designs being developed by the other 40-plus fusion firms.

But then, few if any of these firms see the quest for fusion power as either a horse race or a zero-sum game. Many of them have described their rivalries as fierce, but basically friendly — mainly because, in a world that’s desperate for any form of carbon-free energy, there’s plenty of room for multiple fusion reactor types to be a commercial success.

“I will say my idea is better than their idea. But if you ask them, they will probably tell you that their idea is better than my idea,” says physicist Michel Laberge, General Fusion’s founder and chief scientist. “Most of these guys are serious researchers, and there’s no fundamental flaw in their schemes.” The actual chance of success, he says, is improved by having more possibilities. “And we do need fusion on this planet, badly.”

Editor’s note: This story was changed on November 2, 2023, to correct the amount of compression that General Fusion is aiming for in its reactor; it is 30 centimeters, not 10. The text was also changed to clarify that the blast of energy leads to the resetting of the magnetized target reactor.

This article originally appeared in Knowable Magazine, an independent journalistic endeavor from Annual Reviews.
